In this first post of the AI series, we are going to build a generative AI application that runs locally, using Spring AI with Ollama and Meta's llama3.

What is Spring AI?

Spring AI is an application framework for AI engineering. Its goal is to bring Spring ecosystem design principles, such as portability and modular design, to the AI domain, and to promote POJOs as the building blocks of AI applications.

At its core, Spring AI provides abstractions that serve as the foundation for developing AI applications. These abstractions have multiple implementations, so you can swap components with minimal code changes. For example, Spring AI defines the ChatModel interface, with implementations for OpenAI, Azure OpenAI, Ollama, and other providers, and offers the fluent ChatClient API on top of it.
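To make the portability idea concrete, here is a minimal plain-Java sketch of the pattern behind Spring AI's chat abstractions: application code depends only on an interface, and the provider-specific implementation is swapped in from outside. The `TextGenerator` interface, the two "provider" classes, and `GreetingService` are hypothetical stand-ins invented for illustration; they are not Spring AI types, and the "providers" just transform the text instead of calling a real model.

```java
import java.util.List;

// Hypothetical abstraction standing in for a chat-model interface (NOT a Spring AI type).
interface TextGenerator {
    String generate(String prompt);
}

// Hypothetical "provider A": returns the prompt in upper case.
class UpperCaseGenerator implements TextGenerator {
    @Override
    public String generate(String prompt) {
        return prompt.toUpperCase();
    }
}

// Hypothetical "provider B": returns the prompt reversed.
class ReversingGenerator implements TextGenerator {
    @Override
    public String generate(String prompt) {
        return new StringBuilder(prompt).reverse().toString();
    }
}

// Application code depends only on the interface, so the provider
// can be swapped without changing this class.
class GreetingService {
    private final TextGenerator generator;

    GreetingService(TextGenerator generator) {
        this.generator = generator;
    }

    String greet(String name) {
        return generator.generate("hello " + name);
    }
}

public class PortabilityDemo {
    public static void main(String[] args) {
        // Same service code, two interchangeable implementations.
        for (TextGenerator g : List.of(new UpperCaseGenerator(), new ReversingGenerator())) {
            System.out.println(new GreetingService(g).greet("spring"));
        }
    }
}
```

Running `PortabilityDemo` prints `HELLO SPRING` and then `gnirps olleh`; `GreetingService` itself never changes. In a Spring Boot application the same effect typically comes from dependency injection: the starter you add decides which implementation gets injected.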

What is Ollama?

Ollama is a streamlined tool for running open-source LLMs locally, such as Llama 3, Llama 2, and Mistral. It bundles model weights, configuration, and data into a single package defined by a Modelfile. Ollama supports a variety of LLMs, including Llama 2, uncensored Llama, Code Llama, Falcon, Mistral, Vicuna, WizardCoder, and Wizard Uncensored.

What is Meta Llama 3?

Meta developed and released the Meta Llama 3 family of large language models (LLMs), a collection of pretrained and instruction-tuned generative text models in 8B and 70B sizes. According to Meta, the Llama 3 instruction-tuned models are optimized for dialogue use cases and outperform many of the available open-source chat models on common industry benchmarks. Meta also states that it took great care to optimize helpfulness and safety while developing these models.

Prerequisites

To complete this example, you need:

- An IDE

- JDK 17+ installed with JAVA_HOME configured appropriately (Spring AI builds on Spring Boot 3, which requires Java 17 or later)

- Gradle 8+

- Ollama

Let's Start:

Step 1: To install the Ollama tool on your machine, go to https://ollama.com/ and download the version for your operating system.

Here I am using Windows 11, so I installed the Ollama Windows version. Once it is installed successfully, open the Command Prompt and type `ollama help`; it lists all the available commands.

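Step 2: Pull a model from the Ollama model library (https://ollama.com/library) and run it using the Ollama CLI. In my case I used the llama3 model: `ollama pull llama3` downloads it, and `ollama run llama3` starts an interactive session with it (pulling the model first if it is not already available locally).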

To list the models available locally, use `ollama list`.
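Before wiring up Spring, you can optionally check that llama3 answers over HTTP. Ollama serves a local REST API, by default at http://localhost:11434, and Spring AI's Ollama integration talks to that same server. The minimal sketch below uses only the JDK's `java.net.http` client to POST a prompt to Ollama's `/api/generate` endpoint with streaming disabled. It assumes Ollama is running and llama3 has been pulled; the class and method names are illustrative, and the JSON is built by hand only to avoid extra dependencies.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class OllamaQuickCheck {

    // Default local Ollama endpoint for single-prompt generation.
    static final URI GENERATE_URI = URI.create("http://localhost:11434/api/generate");

    // Escapes characters that must not appear raw inside a JSON string.
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        // Other control characters become \\uXXXX escapes.
                        sb.append("\\").append(String.format("u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.toString();
    }

    // "stream": false asks Ollama for one JSON object instead of a stream of chunks.
    static String buildBody(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model) + "\",\"prompt\":\"" + jsonEscape(prompt)
                + "\",\"stream\":false}";
    }

    static HttpRequest buildRequest(String model, String prompt) {
        return HttpRequest.newBuilder(GENERATE_URI)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(buildBody(model, prompt)))
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response = client.send(
                buildRequest("llama3", "Why is the sky blue? Answer in one sentence."),
                HttpResponse.BodyHandlers.ofString());
        // Prints the raw JSON reply; the generated text is in its "response" field.
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

If this prints status 200 and a JSON body, the model is being served correctly and the Spring Boot application can use the same local Ollama server.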

Now let's see how we can use the locally running llama3 model in a Spring Boot application and call it through REST APIs.

Step 3:

Create a Spring Boot project using https://start.spring.io/

Add the Spring Web and Spring AI Ollama dependencies to build.gradle.

Next, create a simple ChatController that uses the OllamaChatModel class, Spring AI's ChatModel implementation for Ollama. Ollama allows developers to run large language models and generate embeddings locally. It supports the open-source models available in the [Ollama AI Library](https://ollama.ai/library), for example:

- Llama 2 (7B parameters, 3.8GB size)

- Mistral (7B parameters, 4.1GB size)

Please refer to the official Ollama website for the most up-to-date information on available models.

Start the application.

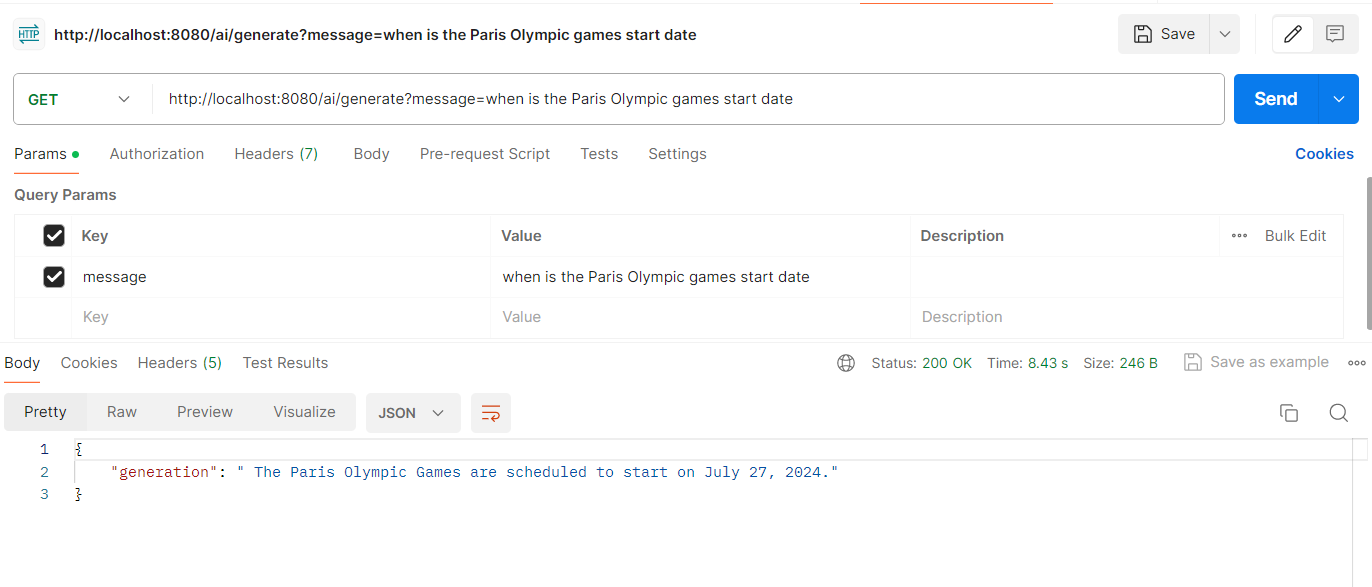

Now it's time to test our locally created generative AI application, built with Spring AI, Ollama, and Meta's llama3, through the REST APIs.

Here we will use Postman to send a message and get responses from the llama3 model running locally in Ollama.
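If you prefer code to Postman, the same request can be sent with the JDK's built-in HTTP client. This is a minimal sketch under stated assumptions: the `/ai/chat` path is hypothetical, and the sketch assumes the ChatController reads the message as a plain-text request body (for example with `@RequestBody String`). Adjust the path and the way the message is passed to match your own controller. Port 8080 is Spring Boot's default.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class ChatEndpointClient {

    // Hypothetical endpoint: Spring Boot's default port plus an assumed /ai/chat mapping.
    static final URI CHAT_URI = URI.create("http://localhost:8080/ai/chat");

    // Builds a POST request carrying the user's message as a plain-text body
    // (assumes the controller accepts the raw request body as the prompt).
    static HttpRequest buildRequest(String message) {
        return HttpRequest.newBuilder(CHAT_URI)
                .header("Content-Type", "text/plain; charset=UTF-8")
                .POST(HttpRequest.BodyPublishers.ofString(message))
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Use command-line arguments as the message, or fall back to a sample prompt.
        String message = args.length > 0 ? String.join(" ", args) : "Tell me a joke about Java.";
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(buildRequest(message), HttpResponse.BodyHandlers.ofString());
        System.out.println("Status: " + response.statusCode());
        System.out.println(response.body());
    }
}
```

Run it while the Spring Boot application is up; the response body should contain the text generated by llama3.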

Summary:

In this example, we have seen how to build a local generative AI application using Spring AI with Ollama and Meta's llama3, exposed through REST APIs, in a few simple steps.

GitHub link: rajivksingh13/teachlea

Please feel free to share your comments. Thanks!!
